In March 2020, the International Baccalaureate (IB) canceled the May 2020 Diploma Programme (DP) in-person exams due to the dangers posed by the COVID-19 pandemic. This required a significant shift in the way students' IB results were calculated. On 13 May 2020, the IB reported the following guidelines for determining May 2020 results:

"Following the submission of the [Internal Assessment coursework and predicted grades] the IB will be using historical assessment data to ensure that we follow a rigorous process of due diligence in what is a truly unprecedented situation. We will be undertaking significant data analysis from previous exam sessions, individual school data and subject data."

According to various international media reports, the shift in how results are determined has created confusion and frustration among students and schools.

The challenges of May 2020 results, however, provide schools with an ideal opportunity to improve outcomes for all students. There are enormous lessons to be learned from May 2020 IB DP results. The first step is gaining an understanding of how your scores were determined.

Using a dynamic data visualization tool and evidence from our statistical analysis, IBSchoolImprovement.com has determined that scores are most likely based on three factors: the predicted grade, the Internal Assessment score, and the school's history of accurately predicting grades.

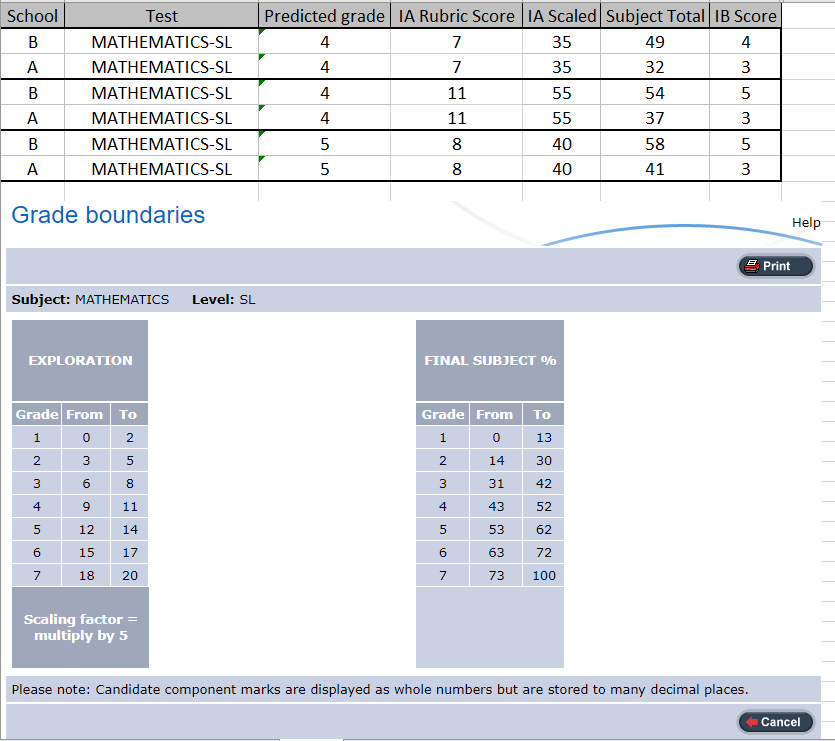

The IB Mathematics SL example below displays three categories of students from schools A and B. Within each category, students received the same predicted grade and the same Internal Assessment score; however, their final results differed dramatically. Why?

The answer lies in understanding each school's accuracy in predicting students' grades in previous examination years.

If a school (like school A) has a history of over-prediction (predicting grades higher than students actually scored in previous years), then the scaled score of the Internal Assessment will be reduced (in some cases dramatically), and the student will receive an IB final mark that is lower than the predicted grade.

If a school (like school B) has a history of accurate prediction or under-prediction, then the scaled score of the Internal Assessment will remain the same or be increased.
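To make the idea of a "prediction history" concrete, here is a minimal Python sketch of how a school might measure its own bias from past sessions. It is not the IB's method, which is not described in this article; the function names, the `tolerance` threshold, and the sample grade pairs are all hypothetical and exist only for illustration.

```python
from statistics import mean

def prediction_bias(records):
    """Average of (predicted - actual) over past students.

    A positive value means the school tended to over-predict.
    """
    return mean(predicted - actual for predicted, actual in records)

def classify_history(records, tolerance=0.25):
    """Label a prediction history; `tolerance` is an assumed cut-off, not an IB value."""
    bias = prediction_bias(records)
    if bias > tolerance:
        return "over-prediction"
    if bias < -tolerance:
        return "under-prediction"
    return "accurate"

# Made-up (predicted, actual) grade pairs on the 1-7 IB scale.
school_a = [(6, 5), (7, 5), (5, 4), (6, 6)]
school_b = [(5, 5), (6, 6), (5, 5), (6, 6)]

print("School A:", classify_history(school_a))
print("School B:", classify_history(school_b))
```

A school could run the same calculation per subject and per year to see where its predictions drift furthest from actual results.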

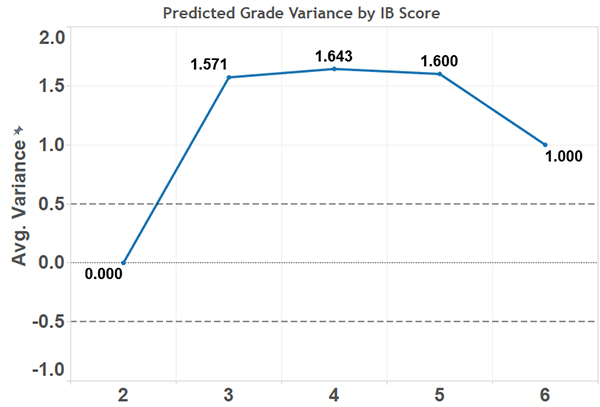

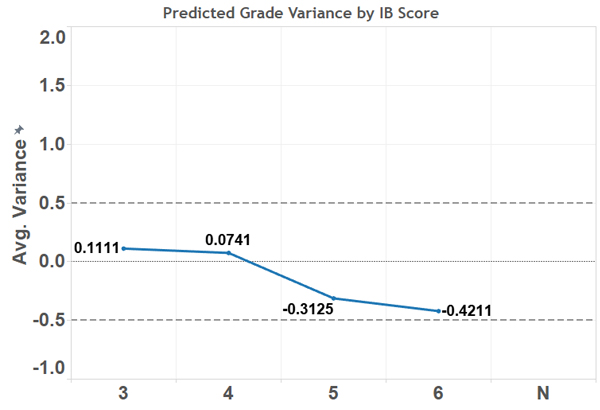

Compare the prediction histories of schools A and B below to understand the scoring pattern:

School A - History of Over Prediction

School B - History of Accurate or Under Prediction

IBSchoolImprovement.com's Data Visualization Tool will provide you with detailed insight into how your school's subject scores were awarded in 2020. The analysis draws on historical data to show how accurately your teams predicted students' results from 2016 to 2020. In addition, the tool will indicate exactly how your May 2020 scores were scaled.

The simple point is that if your team's predictions are historically inaccurate, then teachers are not providing students with valuable feedback on in-class assessments. The point is not to criticize teams - quite the opposite - the point is to empower teachers with actionable information that leads to the development of intellectual skills which serve as the foundation for IB exam and post-secondary success.

How will you utilize an in-depth understanding of your May 2020 and historical IB results to improve classroom practices? IBSchoolImprovement.com is eager to collaborate with you to inspire success in the challenges of the 2020-2021 school year.
